Self-supervised Video Representation Learning Using Inter-intra Contrastive Framework

Li TAO

Xueting Wang

Toshihiko Yamasaki

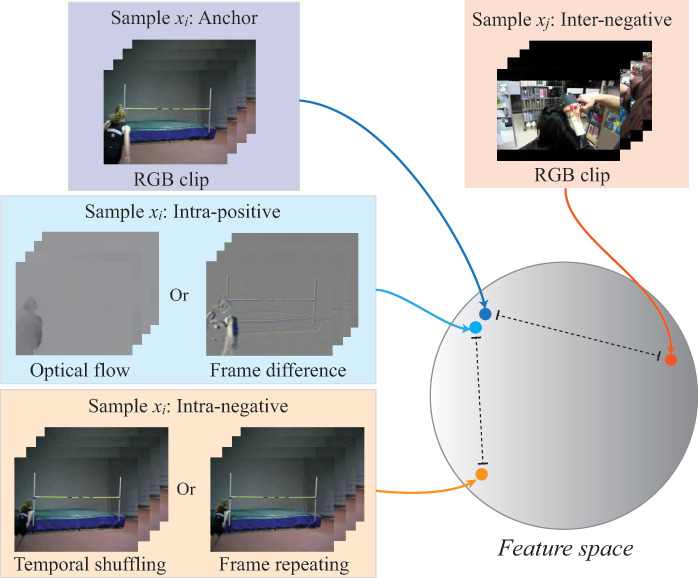

Figure 1. General idea of the proposed method. Given a video 𝑥𝑖, different views of this video are treated as positives, and their features are constrained to be close to each other. Data from other videos are treated as negatives. Temporal relations in the anchor view are broken to generate intra-negative samples, which are also treated as negatives to help the model learn temporal information.

Abstract

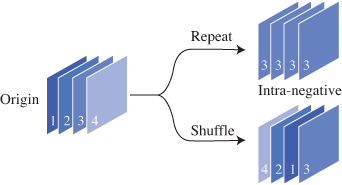

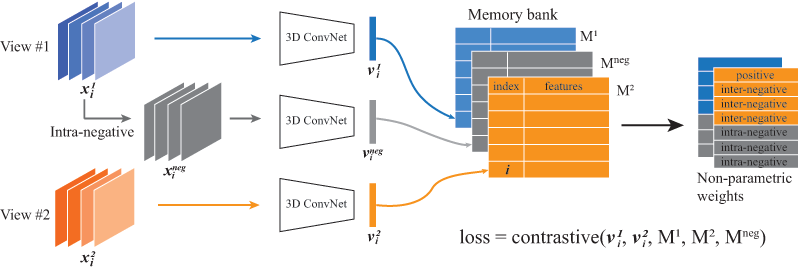

We propose a self-supervised method to learn feature representations from videos. A standard approach in traditional self-supervised methods uses positive-negative data pairs to train with a contrastive learning strategy. In such a case, different modalities of the same video are treated as positives, and video clips from a different video are treated as negatives. Because spatio-temporal information is important for video representation, we extend the negative samples by introducing intra-negative samples, which are transformed from the same anchor video by breaking temporal relations in video clips. With the proposed inter-intra contrastive framework, we can train spatio-temporal convolutional networks to learn video representations. Our framework offers many flexible options, and we conduct experiments with several different configurations. We evaluate the learned video representations on video retrieval and video recognition tasks. Our proposed methods outperform current state-of-the-art results by a large margin, with improvements of 16.7 and 9.5 percentage points in top-1 accuracy on the UCF101 and HMDB51 datasets for video retrieval, respectively. For video recognition, improvements are also obtained on these two benchmark datasets.

Figure 2. Two ways to generate intra-negative samples.
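As an illustration of what "breaking temporal relations" could look like in practice, the following is a minimal NumPy sketch. The text here does not name the two ways shown in Figure 2, so the two transformations below (permuting frame order and repeating a single frame) are assumptions chosen for illustration, and the function names `shuffle_frames` and `repeat_frame` are hypothetical. A clip is assumed to be an array of shape `(T, H, W, C)`.

```python
import numpy as np


def shuffle_frames(clip, rng):
    """Return the clip with its frames in a permuted order.

    Assumed transformation: spatial content is unchanged, only the
    temporal order is destroyed. The permutation is redrawn until it
    differs from the original order.
    """
    num_frames = clip.shape[0]
    if num_frames < 2:
        raise ValueError("need at least two frames to shuffle")
    original_order = np.arange(num_frames)
    perm = rng.permutation(num_frames)
    while np.array_equal(perm, original_order):
        perm = rng.permutation(num_frames)
    return clip[perm]


def repeat_frame(clip, rng):
    """Return a clip of the same shape built from one repeated frame.

    Assumed transformation: a randomly chosen frame is copied to every
    time step, so the result carries no motion information.
    """
    idx = int(rng.integers(clip.shape[0]))
    return np.repeat(clip[idx:idx + 1], clip.shape[0], axis=0)
```

Either output keeps the appearance of the anchor video but loses its temporal structure, so a model can only separate it from the anchor by attending to temporal information.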

Figure 3. Inter-intra contrastive learning framework.
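To make the role of the two kinds of negatives concrete, here is a minimal sketch of a contrastive objective in which the anchor feature is pulled toward a positive (another view of the same video) and pushed away from both inter-negatives (other videos) and an intra-negative (the temporally broken anchor). The softmax-based (InfoNCE-style) form, the cosine similarity, the temperature value of 0.07, and the function names are all assumptions for illustration; the excerpt above does not specify the exact loss.

```python
import numpy as np


def l2_normalize(x, axis=-1, eps=1e-12):
    """Scale vectors to unit length along the given axis."""
    return x / (np.linalg.norm(x, axis=axis, keepdims=True) + eps)


def inter_intra_nce_loss(anchor, positive, inter_negs, intra_neg,
                         temperature=0.07):
    """InfoNCE-style loss with inter- and intra-negatives (assumed form).

    anchor:     (D,)   feature of the anchor view
    positive:   (D,)   feature of another view of the same video
    inter_negs: (K, D) features from other videos
    intra_neg:  (D,)   feature of the temporally broken anchor clip
    """
    anchor = l2_normalize(anchor)
    # Row 0 is the positive; all remaining rows are negatives.
    candidates = l2_normalize(
        np.vstack([positive[None, :], inter_negs, intra_neg[None, :]])
    )
    logits = candidates @ anchor / temperature
    logits = logits - logits.max()  # numerical stability
    log_prob_positive = logits[0] - np.log(np.exp(logits).sum())
    return -log_prob_positive
```

The loss is small when the anchor is close to the positive and far from every negative, and it grows when the intra-negative is as similar to the anchor as the positive is.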